Shifting 'Shift Left' and What We Can Learn from Uber

Developers dislike the "shift left" approach. It's not because they hate security but because they want to ship code. We are asking a lot of developers, and it's okay; we like a challenge and we get paid a lot. What we would like is a CI system that doesn't keep us from merging perfectly good code. Imagine wrapping up an issue on a Friday afternoon after a grueling crunch to deliver a feature, only to have your CI fail. It's frustrating, and sometimes, we're even told to disable tests just to get code out the door, cough Volkswagen cough.

Over the past seven years, I've been deep in the trenches of DevSecOps. The default playbook has been to halt developers at every security or test failure. On the surface, stopping production when we detect a quality issue seems logical; it's a principle borrowed from lean manufacturing and the Toyota Production System. The idea is to ensure quality and reduce rework by catching issues early. But maybe it's time we rethink this. Maybe treating digital assets the same way we treat durable goods isn't the best approach.

Rework is costly in time and materials when you ship physical goods, or when distribution is slow, such as manually updating a ROM on a fleet of vehicles. But does stopping work make sense when deploying to an online system with incremental rollouts?

As a founder of a software company, I need the code to deliver value as quickly as possible; it is the only way we will survive. It makes sense to slow deployments for systems that are costly to deploy to, such as embedded systems, packaged software, and physical goods. However, as the deployment cost decreases, the need to stop production to fix quality issues also decreases. In fact, with fast systems, as long as we provide visibility to the developers, prevent non-compliant systems from deploying, and implement some sort of incremental rollout (please, at least one machine), we should never block CI on test or scan results. The only blocks on CI should be human code review and build failure.

Common Limitations of DevSecOps Systems

Products such as GitHub, GitLab, CircleCI, Jenkins, and friends are amazing at what they do. I have used most of them in anger, and they all do pretty much the same thing: orchestrate software builds, deployments, and testing. However, they were built before the world woke up to software supply chain attacks and the Log4Shell CVE. It was a different world back then. Most organizations didn’t see open source as a security risk, and there was no body of knowledge around securing developer environments or the impact a breach there could have.

This forced us DevOps engineers to shove test after test into the pipeline. Some wizards could craft some efficient pipelines, but they all looked something like this.

Most organizations block CI on test failure, stopping code from delivering value.

A single pipeline, or set of pipelines, glues everything together. If everything magically passes, you are granted access to production infrastructure. The problem is that pipelines break all of the time, and that forces anti-patterns such as combining CI and CD into a single pipeline (aside: see this amazing talk by Adobe and Autodesk on the benefits of separating these two concerns). If you are blocking on high/critical CVEs, the dev may have to stop feature work for a CVE that doesn’t even matter. We do all of this in the name of security and compliance.

The thing is, when a CEO has to weigh compliance, security, and revenue, revenue always wins. If complying costs us revenue, there better be a clear payoff. As a result, developers are incentivized to meet tight deadlines by bypassing or disabling testing to ship. Security often takes a backseat, creating a cycle where vulnerabilities slip through unnoticed. While this may provide short-term gains in delivery speed, it exposes organizations to long-term risks like software supply chain attacks or compliance penalties. The perfect system we build falls apart for all but the most regulated industries.

The alternative is creating very restrictive pipeline templates that are forced to execute cleanly before software is “allowed” to deliver value.

The issue lies in how traditional DevSecOps systems enforce security. They inadvertently create bottlenecks by placing tests as rigid gatekeepers in the CI/CD pipeline. When a pipeline breaks, progress halts, and developers lose valuable time investigating false positives or minor issues. In many cases, the CVEs being flagged might not even be exploitable in the application's context, yet they block critical deployments.

This "all-or-nothing" approach to testing frustrates developers and weakens trust in the tools. Instead of empowering teams to deliver secure, high-quality software, it creates an adversarial relationship between security and development teams.

Insights from KubeCon and the DORA Metrics

I am writing my first draft of this post on a flight back from KubeCon. KubeCon draws over 10,000 platform engineering enthusiasts twice yearly. If you want to understand how to build better platforms, KubeCon is the place to go.

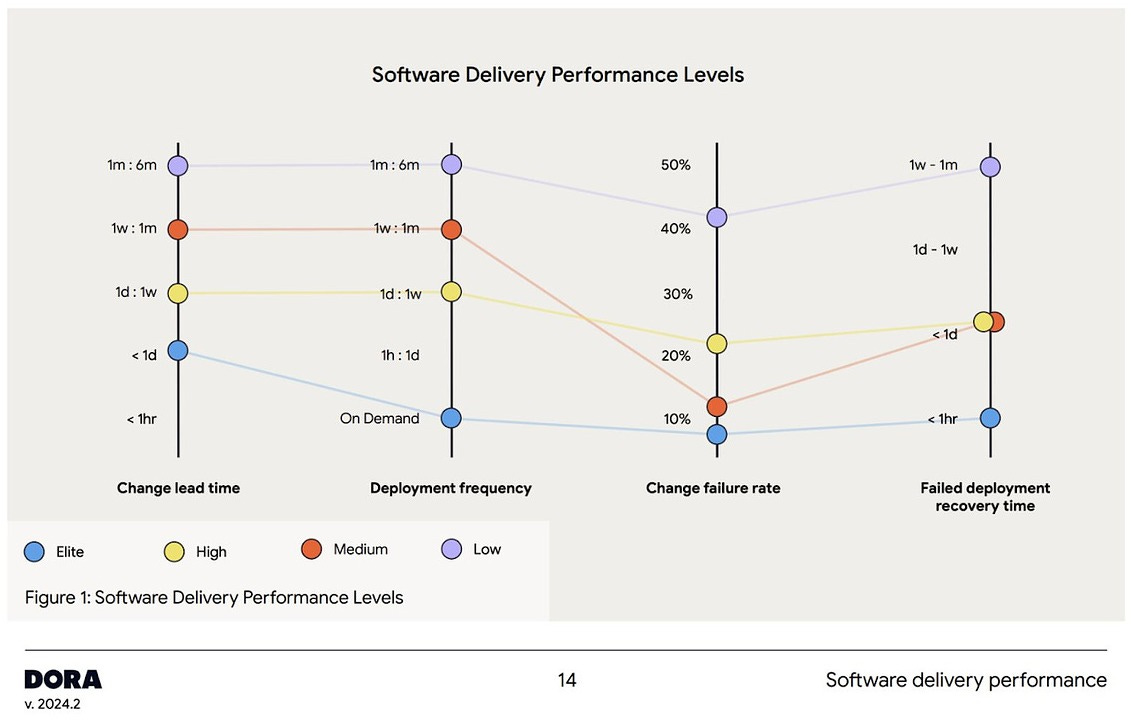

You may have seen my article about the inversion in the DORA metrics' "change failure rate." According to DORA theory, high performers (deploying once a day) should have a lower "change failure rate" than those that only deploy once per week. The idea is that organizations that are organized enough to deploy frequently have fewer failures.

DORA metrics provide a framework for measuring software delivery performance, helping organizations align development speed with reliability and quality.

Deployment Frequency: How often an organization successfully releases to production.

Lead Time for Changes: Time from code committed to code running in production.

Change Failure Rate: Percentage of deployments causing a failure in production.

Time to Restore Service: Time taken to recover from a production failure.

Key Insight:

High-performing teams excel in these metrics, driving improved business outcomes such as profitability, productivity, and customer satisfaction. They ship code faster and more reliably, with fewer failures, showcasing the balance between speed and quality.
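To make the four definitions concrete, here is a minimal sketch of how they might be computed from a list of deployment records. The `Deployment` record and its field names are assumptions made for illustration, not part of any DORA tooling, and medians are just one reasonable way to summarize the two time-based metrics.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; real data would come from your CD system.
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def dora_metrics(deployments: list, window_days: int) -> dict:
    """Summarize the four DORA metrics over a window of `window_days` days."""
    if not deployments:
        raise ValueError("need at least one deployment")
    failures = [d for d in deployments if d.failed]
    restore_times = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        # Deployment Frequency: successful releases per day
        "deployment_frequency_per_day": len(deployments) / window_days,
        # Lead Time for Changes: commit to production
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deployments),
        # Change Failure Rate: share of deployments that caused a failure
        "change_failure_rate": len(failures) / len(deployments),
        # Time to Restore Service: failure to recovery
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }
```

The "inversion" discussed above would show up here as `change_failure_rate` rising as `deployment_frequency_per_day` rises, which is the opposite of what the DORA model predicts.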

On my first day, at the first talk I attended at the co-located startup fest, I had the luck of sitting right next to Lucy Sweet. I had never met Lucy before, but I looked at her badge, and it said Uber. Uber is probably the best company at CI/CD. Uber never goes down, and they deploy 34,000 times a week worldwide. They are the definition of a DORA elite performer. My guess is that their developers are not encouraged to disable or bypass tests.

I asked her why this inversion is not happening with elite performers such as Uber. I don't think I have ever seen somebody so excited to tell their story.

Learning from Uber's Deployment Model

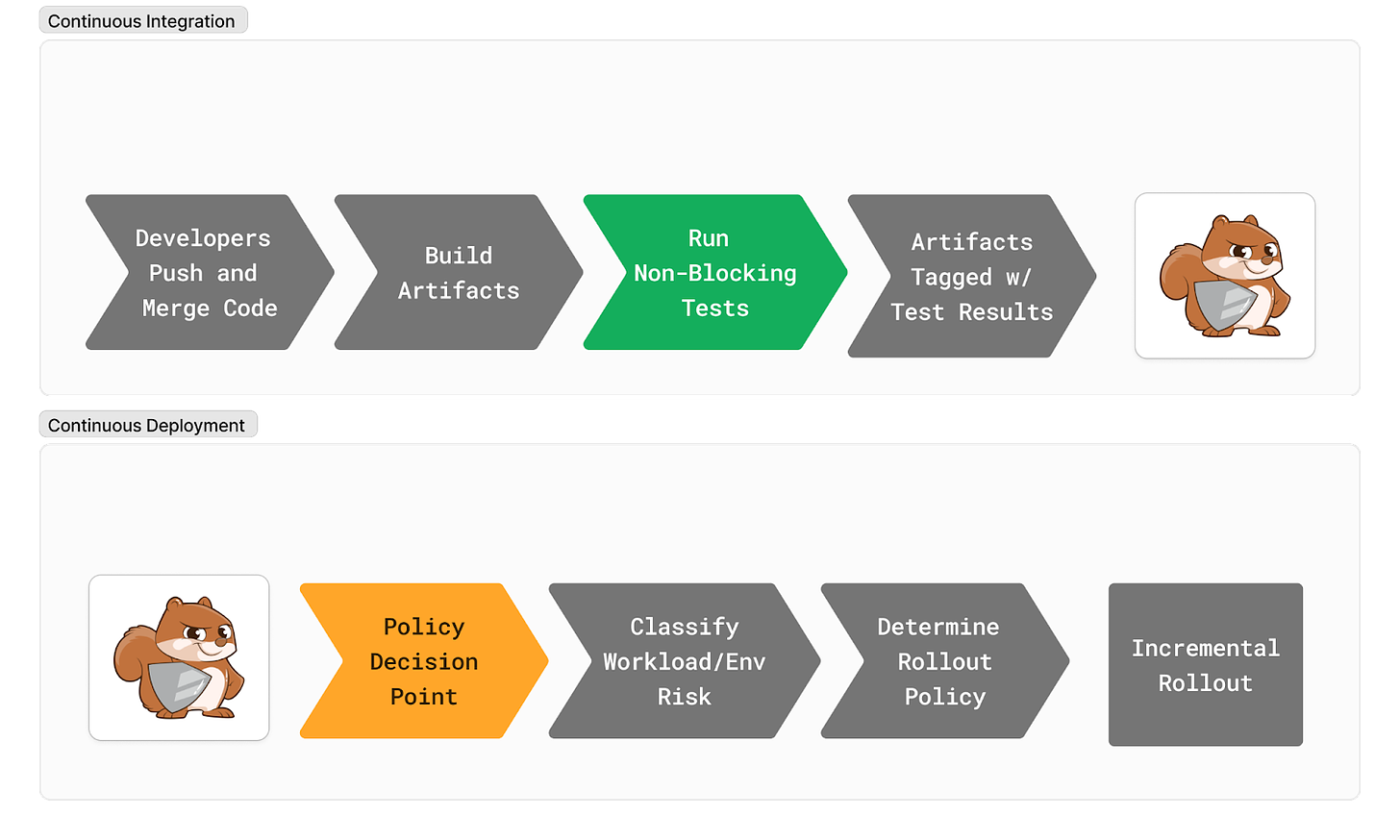

Uber takes a different approach to security. Instead of creating pipelines that are 15 steps long and fail 50% of the time due to flakiness, they defer the policy decision to the CD system. This allows developers to continue to push, merge, and review code while the platform team decides whether the workload is safe to deploy and provides feedback in the form of induced friction.

Uber uses incremental rollouts on each of their 34,000 deployments a week. Workloads build up trust over time. That trust, combined with provenance data from tests against the codebase, is used to classify each workload on a scale ranging from "unsecured" to "uber-secure." Workloads that are classified as uber-secure have the fastest rollout policy, which reduces the friction between the developer and production as much as possible. However, workloads that do not pass some of the required security tests are given the most restrictive rollout policy. Uber created a system that incentivizes good behavior.
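The idea of mapping a workload's classification to a rollout policy can be sketched in a few lines. This is an illustration under stated assumptions, not Uber's implementation: only the labels "unsecured" and "uber-secure" come from the description above, while the check names, the intermediate "secured" tier, the trust threshold, and the rollout numbers are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical check names; Uber's actual required checks are not listed in the source.
REQUIRED_CHECKS = frozenset({"unit-tests", "sast", "dependency-scan"})
# Hypothetical number of clean deployments needed to reach the top tier.
TRUST_THRESHOLD = 20


@dataclass(frozen=True)
class RolloutPolicy:
    stages_percent: tuple  # share of the fleet updated at each step
    bake_minutes: int      # wait time between steps


# Illustrative numbers only: more trust means fewer steps and shorter waits.
POLICIES = {
    "unsecured": RolloutPolicy((1, 5, 25, 50, 100), 60),
    "secured": RolloutPolicy((5, 25, 100), 30),
    "uber-secure": RolloutPolicy((10, 100), 5),
}


def classify(passed_checks: set, clean_deploys: int) -> str:
    """Classify a workload from its test provenance and its deployment history."""
    if not REQUIRED_CHECKS <= set(passed_checks):
        return "unsecured"    # missing a required check: most restrictive tier
    if clean_deploys >= TRUST_THRESHOLD:
        return "uber-secure"  # all checks pass and trust has built up over time
    return "secured"


def rollout_policy(passed_checks: set, clean_deploys: int) -> RolloutPolicy:
    """Nothing is blocked in CI; a weaker posture only slows the rollout."""
    return POLICIES[classify(passed_checks, clean_deploys)]
```

Note the design choice: a failing check never stops a merge. It changes which policy the CD system applies, so the cost of skipping security work is friction rather than a red pipeline.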

“Every test case is automatically registered in our datastore on land. Analytics on historical pass rate, common failure reasons, and ownership information are all available to developers who can track the stability of their tests over time. Common failures are aggregated so that they can be investigated more easily”. - https://www.uber.com/en-DK/blog/shifting-e2e-testing-left

Uber's approach to continuous deployment shows us what's possible when we rethink traditional practices. Uber empowers developers to push, merge, and review code without unnecessary blockers by deferring policy decisions to the CD system and using incremental rollouts. This strategy lets workloads build trust over time, classifying them based on provenance data and tests against the codebase.

The Culture Shift

Shifting the policy enforcement point from CI to CD had a profound impact on Uber's engineering culture. Instead of beating developers over the head with a stick for not passing CI—often due to flakiness or issues beyond their control—Uber has gamified security and reliability engineering. Advancing security from "unsecured" to "uber-secure" feels rewarding; developers don't want to be at level one for security—they aim to be the best. This approach incentivizes good behavior and fosters a positive engineering culture.

However, until tools like Witness and Archivista were available, organizations didn't have the means to defer policy enforcement effectively. Pipeline blocks have traditionally been the only way to ensure quality, forcing developers to address issues before code could proceed. By moving policy enforcement to the CD stage, we provide visibility and maintain high standards without grinding the development process to a halt.

Rethinking Traditional DevSecOps Practices

Pipeline blocks were introduced with good intentions. Previously, tracking the provenance of these CI steps was not possible using off-the-shelf tools; you needed to build custom systems to do it. Uber's model flips the script on the traditional "shift left" mentality. Instead of bogging down the CI pipeline with endless checks that fail half the time due to flakiness, they allow the platform team to make deployment decisions based on the workload's security posture. This doesn't mean security is an afterthought; it's integrated into the deployment process in a way that doesn't impede progress.

It's time we stop treating digital assets like durable goods. Rework is tough with physical products, but in software, especially with systems that support rollouts, we can afford to be more flexible. Blocking CI for every test or security failure might not be the best approach anymore. Instead, we should focus on providing visibility to developers and preventing non-compliant systems from deploying without grinding the entire process to a halt or encouraging bad behavior.

What are Witness and Archivista? Witness and Archivista are tools that enable organizations to gather and store compliance evidence throughout the development and deployment process. Witness captures provenance data, while Archivista stores this data, allowing teams to defer policy enforcement from earlier CI stages to later in the CD pipeline, ensuring compliance without halting development progress.
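The pattern of recording evidence in CI and enforcing policy in CD can be sketched without any particular tool. The code below deliberately does not use the Witness or Archivista APIs; `EvidenceStore` and `may_deploy` are hypothetical names, and an in-memory dictionary stands in for a real attestation store.

```python
from collections import defaultdict


class EvidenceStore:
    """In-memory stand-in for an attestation store (hypothetical, not Archivista's API)."""

    def __init__(self):
        self._by_artifact = defaultdict(dict)

    def record(self, artifact_digest: str, step: str, passed: bool) -> None:
        # CI calls this after every step and then keeps going, pass or fail.
        self._by_artifact[artifact_digest][step] = passed

    def results(self, artifact_digest: str) -> dict:
        # Return a copy so callers cannot mutate stored evidence.
        return dict(self._by_artifact.get(artifact_digest, {}))


def may_deploy(store: EvidenceStore, artifact_digest: str, required_steps: list):
    """CD-time policy check. Returns (allowed, reasons) so developers get visibility."""
    results = store.results(artifact_digest)
    reasons = []
    for step in required_steps:
        if step not in results:
            reasons.append(f"missing evidence for {step}")
        elif not results[step]:
            reasons.append(f"{step} failed")
    return (not reasons, reasons)
```

A failed scan in CI becomes a recorded fact rather than a stopped pipeline; the artifact is simply refused at deploy time, along with the list of reasons the developer needs to fix it.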

A Case Study

Autodesk has embraced Witness and Archivista to defer policy decisions until the CD phase. This strategy allows product development to move forward without hitting compliance roadblocks, ensuring only compliant artifacts make it into production. By generating Trusted Telemetry with Witness and storing it in Archivista, they gain deep insights into the compliance and security status of every artifact ever built. Patterns like this have even helped them achieve FedRAMP ATO.

Autodesk leverages the CNCF in-toto project's Witness CLI tool, following recommendations in NIST 800-204D, to generate attestations throughout their SDLC. By instrumenting CI steps, developers can customize their pipelines while still using core libraries that inject platform tooling to produce cryptographically signed metadata. These attestations are stored in Archivista, allowing Autodesk to query and inspect metadata via a graph API. This setup enables them to trace artifacts back to their source, ensuring integrity and facilitating policy decisions during the CD phase. For instance, if a suspicious binary is discovered, they can use the stored attestations to determine if it was built on their systems and identify the associated change request.
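The suspicious-binary lookup described above comes down to hashing the binary and searching signed attestations for a matching digest. The following is a simplified sketch, not how Witness or in-toto actually sign or store attestations: an HMAC with a shared demo key stands in for a real asymmetric signature, and the attestation layout and the change request ID format are assumptions.

```python
import hashlib
import hmac
import json

SIGNING_KEY = b"demo-key"  # assumption: a shared secret stands in for a real signing key


def digest(data: bytes) -> str:
    """SHA-256 digest used to identify an artifact by its content."""
    return hashlib.sha256(data).hexdigest()


def _sign(statement: dict, key: bytes) -> str:
    # Serialize deterministically so the same statement always signs the same way.
    payload = json.dumps(statement, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def attest(artifact: bytes, change_request: str, key: bytes = SIGNING_KEY) -> dict:
    """Produce a signed statement linking an artifact digest to a change request."""
    statement = {"sha256": digest(artifact), "change_request": change_request}
    return {"statement": statement, "signature": _sign(statement, key)}


def trace(binary: bytes, attestations: list, key: bytes = SIGNING_KEY):
    """Return the change request that produced `binary`, or None if no valid attestation matches."""
    wanted = digest(binary)
    for att in attestations:
        expected = _sign(att["statement"], key)
        if not hmac.compare_digest(expected, att["signature"]):
            continue  # skip attestations whose signature does not verify
        if att["statement"]["sha256"] == wanted:
            return att["statement"]["change_request"]
    return None
```

If `trace` returns `None`, the binary was either not built on your systems or its attestation was tampered with; both cases warrant the same response.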

Join Our Community

If this resonates with you, it's worth considering how you can apply these insights to your development and deployment processes. By rethinking how you handle CI/CD pipelines and security checks, you can reduce friction between developers and production, allowing for faster value delivery without compromising security.

Adopting strategies like Uber's can help reduce engineering and compliance costs. It enables teams to maintain high security standards while delivering features and updates more efficiently. We are working on problems like these at TestifySec and in the in-toto community. Read our blog, join us on CNCF Slack, or let us know if you would like to see what a compliance platform that developers love looks like.

References

Uber. (n.d.). Continuous deployment for large monorepos. Uber Engineering Blog. Retrieved from https://www.uber.com/en-AU/blog/continuous-deployment/

Uber. (n.d.). Shifting E2E testing left at Uber. Uber Engineering Blog. Retrieved from https://www.uber.com/en-DK/blog/shifting-e2e-testing-left/

Toyota. (n.d.). Toyota Production System. Toyota Global. Retrieved from https://global.toyota/en/company/vision-and-philosophy/production-system/